The Model Context Protocol (MCP) is an open-source standard for connecting AI applications to external systems. Think of it as a universal translator that enables AI models like Claude and ChatGPT to seamlessly interact with the vast world of software and data, from your personal calendar to complex enterprise databases.

What is MCP and Why Do We Need It?

At its core, MCP is a standardized communication layer that allows AI applications to access and use external tools, data, and workflows. This is a crucial capability because large language models (LLMs) are inherently limited by their training data: their knowledge is frozen at training time, and on their own they cannot read live data or take actions in other systems. MCP breaks down these barriers, enabling an AI assistant to, for example, access your Google Calendar to schedule a meeting, pull data from a Salesforce report, or even generate a web app from a Figma design.

Before MCP, connecting an AI model to a new tool required building a custom integration, a time-consuming and inefficient process. MCP provides a standardized "plug-and-play" framework, much like USB-C for electronics, that simplifies development and fosters a rich ecosystem of interconnected AI applications.
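To make the "standardized communication layer" concrete, here is a minimal sketch of the messages a client might send to an MCP server. MCP messages use the JSON-RPC 2.0 envelope, and `tools/list` and `tools/call` are the protocol's methods for discovering and invoking tools. The tool name `create_event` and its arguments are hypothetical, chosen only to echo the calendar example above; a real server defines its own tools.

```python
import json


def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request, the message envelope MCP uses."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask a server which tools it offers.
list_tools = make_request(1, "tools/list")

# Invoke one tool by name. "create_event" and its arguments are hypothetical.
call_tool = make_request(
    2,
    "tools/call",
    {
        "name": "create_event",
        "arguments": {"title": "Design review", "start": "2025-01-15T10:00:00Z"},
    },
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

Because every server accepts the same two message shapes, a client written once can talk to any compliant server without a custom integration per tool.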

The Evolution from Tool Calling to a Standardized Protocol

The idea of AI using external tools isn't new. OpenAI introduced "function calling" in 2023, which was a significant step in allowing models to perform specific tasks. However, these early solutions were often proprietary and lacked a unified standard, leading to fragmentation.

Recognizing this challenge, Anthropic introduced MCP in November 2024 as an open standard to create a universal language for AI-tool interaction. The protocol was quickly adopted by major players in the AI space, including OpenAI and Google DeepMind, which has made it a de facto industry standard. Today, MCP is supported by a growing number of platforms and has SDKs available for most major programming languages, including Python, TypeScript, and Java.

The Context Overload Problem and Innovative Solutions

As the number of tools connected to an AI agent grows, a new problem emerges: context overload. When an AI has access to hundreds or even thousands of tools, simply loading all the tool descriptions into the model's context window can consume a large share of the available tokens, increasing costs and latency before the user has even submitted a prompt. This can also lead to "tool hallucination," where the model, faced with an overwhelming number of options, picks the wrong tool or struggles to choose one at all.
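A rough back-of-the-envelope sketch shows how quickly tool definitions add up. The definitions below are hypothetical placeholders shaped like MCP tool entries (`name`, `description`, `inputSchema`), and the four-characters-per-token ratio is only a common rule of thumb, not a real tokenizer, so treat the printed numbers as an order-of-magnitude estimate.

```python
import json

CHARS_PER_TOKEN = 4  # rough rule of thumb, not a real tokenizer


def make_tool_definition(index):
    """Return a hypothetical tool definition shaped like an MCP tool entry."""
    return {
        "name": f"tool_{index}",
        "description": "Placeholder description of what this tool does and when to use it.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Search text"},
                "limit": {"type": "integer", "description": "Maximum results"},
            },
            "required": ["query"],
        },
    }


def estimate_tokens(tool_definitions):
    """Estimate the tokens used if every definition is serialized into the prompt."""
    serialized = json.dumps(tool_definitions)
    return len(serialized) // CHARS_PER_TOKEN


for count in (10, 100, 1000):
    tools = [make_tool_definition(i) for i in range(count)]
    print(f"{count:>5} tools -> ~{estimate_tokens(tools)} tokens")
```

The estimate grows linearly with the number of tools, and this cost is paid on every request, whether or not any of the tools end up being used.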

To address this, the AI community is developing innovative design patterns. Two notable approaches are Klavis AI's "Progressive Discovery" and Anthropic's "Code Execution."

Klavis AI's Progressive Discovery

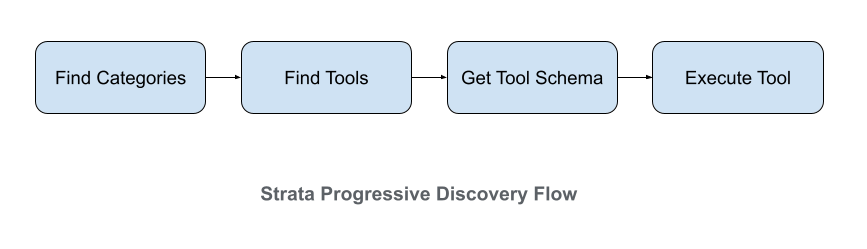

Klavis AI tackles the context overload problem with a "less is more" philosophy through its Strata product. Instead of presenting all available tools at once, Strata guides the AI agent through a logical discovery process:

- Intent Recognition: The agent first identifies the user's high-level intent.

- Category Navigation: Based on the intent, the agent is shown relevant categories of tools (e.g., "GitHub" for code-related tasks).

- Action Selection: The agent then explores the actions available within a selected category.

- API Execution: Only when a specific action is chosen is the full tool definition loaded into the context.

This progressive disclosure method reduces the initial token load, because full tool definitions stay out of the context until a specific action is chosen. It also minimizes confusion for the model and lets the agent work with much larger tool catalogs without overwhelming the context window.
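The discovery steps above can be sketched in a few lines. This is an illustrative toy, not Strata's actual API: the catalog, the function names (`list_categories`, `list_actions`, `load_definition`), and the tools themselves are all hypothetical. Intent recognition is done by the model, so the sketch simply hardcodes the category and action it would pick.

```python
import json

# Hypothetical catalog: category -> action -> full tool definition.
CATALOG = {
    "github": {
        "create_issue": {
            "description": "Open a new issue in a repository.",
            "inputSchema": {
                "type": "object",
                "properties": {
                    "repo": {"type": "string"},
                    "title": {"type": "string"},
                },
                "required": ["repo", "title"],
            },
        },
        "list_pull_requests": {
            "description": "List open pull requests for a repository.",
            "inputSchema": {
                "type": "object",
                "properties": {"repo": {"type": "string"}},
                "required": ["repo"],
            },
        },
    },
    "calendar": {
        "create_event": {
            "description": "Create a calendar event.",
            "inputSchema": {
                "type": "object",
                "properties": {
                    "title": {"type": "string"},
                    "start": {"type": "string"},
                },
                "required": ["title", "start"],
            },
        },
    },
}


def list_categories():
    """Category navigation: return category names only."""
    return sorted(CATALOG)


def list_actions(category):
    """Action selection: return action names within one category."""
    return sorted(CATALOG[category])


def load_definition(category, action):
    """API execution: load the full definition for the one chosen action."""
    return {"name": f"{category}.{action}", **CATALOG[category][action]}


# Everything the agent sees while handling "file a bug for this repo".
context = [
    list_categories(),
    list_actions("github"),
    load_definition("github", "create_issue"),
]

progressive_size = len(json.dumps(context))
upfront_size = len(json.dumps(CATALOG))
print(f"progressive: {progressive_size} chars, everything upfront: {upfront_size} chars")
```

With only three tools the saving is modest. The point of the pattern is that the progressive path stays roughly the same size as the catalog grows, while the upfront cost grows with every tool added.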

Anthropic's Code Execution

Anthropic has proposed a different solution: having the AI agent write code to interact with tools instead of making direct tool calls. In this pattern, sometimes called "Code Mode," MCP servers are presented as code APIs. The agent can then write a script to perform a series of actions, such as fetching data from one tool, processing it, and then sending it to another.

This approach has several advantages:

- Context Efficiency: The agent only needs to load the definitions for the tools it actively uses in its code.

- Data Privacy: Intermediate results from tool calls remain within the execution environment and don't need to be passed back and forth through the model's context, which is particularly useful for sensitive data.

- Powerful Control Flow: The agent can use loops, conditionals, and other programming constructs to perform complex, multi-step tasks more efficiently.
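As a minimal sketch of the idea, the script below is the kind of code an agent might write in a sandbox. The functions `fetch_report_rows` and `post_summary` are hypothetical stand-ins for wrappers around MCP tools, stubbed with fake data so the example runs on its own; they are not part of any real SDK.

```python
def fetch_report_rows():
    """Stand-in for a wrapper around a hypothetical MCP tool returning report rows."""
    return [
        {"account": "Acme", "email": "ops@acme.example", "amount": 1200},
        {"account": "Globex", "email": "it@globex.example", "amount": 300},
        {"account": "Initech", "email": "hq@initech.example", "amount": 4500},
    ]


SENT_MESSAGES = []  # records what the stubbed messaging tool was asked to send


def post_summary(channel, text):
    """Stand-in for a wrapper around a hypothetical messaging tool."""
    SENT_MESSAGES.append((channel, text))
    return {"ok": True}


def agent_script():
    """Script an agent might write; only its return value goes back to the model."""
    rows = fetch_report_rows()  # full rows, including emails, stay in the sandbox
    large = [row for row in rows if row["amount"] >= 1000]  # filter in code
    total = sum(row["amount"] for row in large)
    if large:  # ordinary control flow instead of one model round trip per step
        post_summary("#sales", f"{len(large)} accounts over 1000, total {total}")
    return {"matched": len(large), "total": total}


result = agent_script()
print(result)  # {'matched': 2, 'total': 5700}
```

The model only ever sees the two-field summary. The raw rows and email addresses never enter its context, and the filtering, aggregation, and conditional send happen in one execution rather than across several separate tool calls.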

The Future of MCP

MCP is more than just a technical specification; it's a foundational piece of the infrastructure for the next generation of AI agents. As models become more capable, their ability to interact with the digital world will be a key differentiator. We can expect to see the MCP ecosystem continue to grow, with more sophisticated tools and more intelligent methods for tool discovery and orchestration.

The ongoing innovation in this space, from progressive discovery to code execution, highlights a clear trend: the future of AI is not just about bigger models, but about smarter, more efficient ways of connecting them to the world. For developers, understanding and leveraging MCP will be essential for building the powerful, context-aware AI applications of tomorrow.

FAQs

1. What is the main difference between MCP and older function-calling APIs?

The main difference is standardization. While function-calling APIs were often specific to a particular model or vendor, MCP is an open standard adopted by major AI companies, so a tool exposed through MCP can be used by any compatible client. The result is better interoperability and a more unified developer experience.

2. Is context overload a serious problem for AI developers?

Yes. As AI agents are given access to more tools, the size of the tool definitions can overwhelm the model's context window. This leads to higher costs and increased latency, and it can even cause the model to make mistakes or "hallucinate" the wrong tool for a task.

3. Do I need to choose between Progressive Discovery and Code Execution?

Not necessarily. These are design patterns that can be used in different scenarios. Progressive Discovery is excellent for platforms with a large and diverse set of tools, while Code Execution is particularly powerful for complex, data-intensive workflows. They represent different approaches to solving the same core problem of context management.