AI agents are moving beyond simple chatbots to become powerful tools that can autonomously reason, plan, and execute complex tasks. But connecting these agents to the real world—integrating APIs and managing complex workflows—remains a major development hurdle.

This guide introduces a more efficient solution. By combining Langflow, an intuitive visual builder for AI, with the Model Context Protocol (MCP), a universal standard for tool integration, you can streamline the entire process. We'll show you how this powerful stack simplifies agent development, allowing you to build sophisticated AI applications faster.

First, What Exactly Is an AI Agent?

Before we dive into the how, let's clarify the what. An AI agent is a software program designed to perceive its environment, make decisions, and take actions to achieve specific goals. Unlike traditional programs that follow a rigid set of instructions, agents possess a degree of autonomy.

Key characteristics of an AI agent include:

| Feature | Description |
|---|---|
| Autonomy | Operates independently without constant human intervention to achieve its goals. |
| Reasoning | Utilizes logic and available information to make informed decisions and solve problems. |
| Planning | Decomposes complex goals into smaller, actionable sub-tasks and executes them sequentially. |
| Tool Use | Interacts with external tools, APIs, and data sources to gather information and perform actions. |

Think of an agent as an intelligent assistant that can not only answer questions but also perform multi-step operations like scheduling meetings, analyzing data, or even writing and debugging code.
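To make the four characteristics concrete, here is a minimal, self-contained sketch of an agent loop. The "model" is a hard-coded, hypothetical stand-in (`fake_llm`) rather than a real LLM, and the tools `get_weather` and `send_message` are invented for illustration; a real agent would ask an LLM to choose each next step.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical tools the agent can call (Tool Use).
TOOLS: dict[str, Callable[[str], str]] = {
    "get_weather": lambda city: f"Sunny in {city}",
    "send_message": lambda text: f"Sent: {text}",
}


def fake_llm(goal: str, history: list[str]) -> tuple[str, str] | None:
    """Hypothetical stand-in for an LLM call (this stub ignores `goal`).

    Returns (tool_name, argument) for the next step, or None when done.
    A real agent would derive this plan from the model's output.
    """
    plan = [("get_weather", "Paris"), ("send_message", "Pack sunglasses")]
    step = len(history)
    return plan[step] if step < len(plan) else None


def run_agent(goal: str, max_steps: int = 5) -> list[str]:
    """Decide-act-observe loop that runs until the planner reports it is done."""
    history: list[str] = []
    for _ in range(max_steps):  # Autonomy: no human input between steps
        decision = fake_llm(goal, history)  # Reasoning and Planning
        if decision is None:
            break
        tool_name, arg = decision
        history.append(TOOLS[tool_name](arg))  # Tool Use; the result is observed
    return history


print(run_agent("Tell my friend what to pack for Paris"))
# ['Sunny in Paris', 'Sent: Pack sunglasses']
```

The `max_steps` cap is a common safeguard so an agent that never reaches its goal cannot loop forever.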

Enter Langflow: Visualizing AI Workflows

Building an AI agent from scratch can be a daunting task, often involving boilerplate code and complex integrations. Langflow is an open-source visual framework designed to simplify this process. It provides an intuitive drag-and-drop interface that allows developers to build, prototype, and deploy AI applications with remarkable speed.

With Langflow, you can:

- Visually Orchestrate Components: Connect Large Language Models (LLMs), vector databases, APIs, and custom Python code as nodes in a flow chart. This visual approach makes it easy to understand the logic and data flow of your application.

- Rapid Prototyping: Quickly experiment with different models, prompts, and tool configurations without rewriting significant amounts of code.

- Leverage Pre-built Components: Langflow comes with a rich library of integrations for popular LLMs, data stores, and services, allowing you to focus on the unique logic of your agent.

- Deploy as an API: Once your flow is complete, you can instantly expose it as an API, making it easy to integrate into your existing applications.
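As a sketch of what calling a deployed flow can look like, the snippet below builds a request for Langflow's run endpoint (`/api/v1/run/<flow_id>`) using only the Python standard library. Assumptions: Langflow is running locally on its default port 7860, `FLOW_ID` and `API_KEY` are placeholders for your own values, and the nested response layout in `extract_reply` matches your Langflow version; compare it with the API snippet Langflow generates for your flow before relying on it.

```python
import json
import urllib.request

# Placeholders (assumptions): replace with your own Langflow URL, flow ID, and key.
LANGFLOW_URL = "http://localhost:7860"
FLOW_ID = "your-flow-id"
API_KEY = "your-langflow-api-key"


def build_run_request(message: str) -> urllib.request.Request:
    """Build a POST request that sends one chat message to a flow."""
    payload = {
        "input_value": message,
        "input_type": "chat",
        "output_type": "chat",
    }
    return urllib.request.Request(
        url=f"{LANGFLOW_URL}/api/v1/run/{FLOW_ID}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-api-key": API_KEY},
        method="POST",
    )


def extract_reply(response_body: dict) -> str:
    """Pull the chat text out of a run response.

    Assumes the layout outputs[0].outputs[0].results.message.text;
    verify it against a real response from your Langflow version.
    """
    return response_body["outputs"][0]["outputs"][0]["results"]["message"]["text"]
```

To send the request, pass it to `urllib.request.urlopen(...)`, decode the body with `json.load`, and hand the result to `extract_reply`.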

Langflow democratizes AI development by abstracting away the low-level complexities, empowering developers to build sophisticated agentic systems more efficiently.

MCP: The Universal Translator for AI Agents

An agent's true power lies in its ability to interact with the outside world. This is where the Model Context Protocol (MCP) comes in. MCP is an open standard that acts as a universal "API of APIs" for AI, enabling seamless communication between an LLM and external tools and data sources.

Think of MCP as a standardized USB-C port for your AI agent. Instead of building custom, one-off integrations for every API you want to use, MCP provides a consistent protocol for an agent to discover and invoke tools. This solves two major problems in agent development:

- Reduces Integration Complexity: No more writing bespoke connector code for every single service. An MCP server exposes its capabilities in a standardized way that any MCP-compliant agent can understand.

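- Helps with Tool Overload: As agents become more powerful, they might need access to hundreds or even thousands of tools. Presenting all these options to an LLM at once can overwhelm its context window and lead to poor performance. Because MCP standardizes how tools are listed and described, a server can control how many tools it reveals to the agent at a time.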
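Under the hood, MCP messages are JSON-RPC 2.0. The sketch below builds the two requests a client uses to discover and invoke tools, `tools/list` and `tools/call`. It only constructs the messages: the `create_event` tool and its arguments are hypothetical, and a real client must first complete MCP's `initialize` handshake and send the messages over a transport such as stdio or Streamable HTTP.

```python
from __future__ import annotations

import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request IDs must be unique within a session


def jsonrpc_request(method: str, params: dict | None = None) -> str:
    """Serialize a JSON-RPC 2.0 request, omitting params when there are none."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discovery: ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invocation: call one tool by name with JSON arguments.
# "create_event" and its arguments are hypothetical examples.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "create_event", "arguments": {"title": "Standup", "time": "09:00"}},
)

print(list_tools)
print(call_tool)
```

The server's reply to `tools/list` describes each tool with a name, a description, and a JSON Schema for its inputs, which is what lets any MCP-compliant agent call tools it has never seen before.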

Klavis Strata: Taming Tool Overload with Progressive Discovery

The challenge of tool overload is where solutions like Klavis Strata shine. Strata is an intelligent MCP server that, instead of bombarding the agent with a massive list of tools, guides it through a process of progressive discovery.

Here's how it works:

- Intent Recognition: The agent first identifies the high-level intent of the user's request (e.g., "manage my calendar").

- Category Navigation: Strata presents broad categories of tools related to that intent (e.g., "scheduling," "events," "contacts").

- Action Selection: Once the agent selects a category, Strata reveals the specific actions available within it (e.g., create_event, find_available_times).

- Execution: Only when a specific action is chosen does Strata provide the detailed API schema for execution.
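The toy sketch below mimics these stages in plain Python. The catalog, category names, and `discover_*` functions are hypothetical and are not Strata's actual interface; the point is that each call reveals only one layer, so the agent's context never holds the full tool list at once.

```python
from __future__ import annotations

# Hypothetical catalog: intent -> category -> action -> JSON schema.
CATALOG: dict[str, dict[str, dict[str, dict]]] = {
    "calendar": {
        "scheduling": {
            "find_available_times": {
                "type": "object",
                "properties": {"duration_minutes": {"type": "integer"}},
            },
        },
        "events": {
            "create_event": {
                "type": "object",
                "properties": {"title": {"type": "string"}, "start": {"type": "string"}},
                "required": ["title", "start"],
            },
        },
    },
}


def discover_categories(intent: str) -> list[str]:
    """Category Navigation: only category names for the recognized intent."""
    return sorted(CATALOG[intent])


def discover_actions(intent: str, category: str) -> list[str]:
    """Action Selection: only action names inside the chosen category."""
    return sorted(CATALOG[intent][category])


def get_action_schema(intent: str, category: str, action: str) -> dict:
    """Execution: the full schema is revealed only for the chosen action."""
    return CATALOG[intent][category][action]


intent = "calendar"  # Intent Recognition: assume the agent mapped the request here
print(discover_categories(intent))  # ['events', 'scheduling']
print(discover_actions(intent, "events"))  # ['create_event']
print(get_action_schema(intent, "events", "create_event")["required"])  # ['title', 'start']
```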

Klavis AI reports that this approach significantly improves performance: in its internal benchmarks, Strata achieves a +15.2% higher pass@1 rate than the official GitHub MCP server, which Klavis attributes to preventing decision paralysis and optimizing context usage.
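For readers unfamiliar with the metric: pass@k is commonly computed with the unbiased estimator used in code-generation benchmarks. For a task with n sampled attempts of which c succeed, pass@k = 1 - C(n-c, k) / C(n, k), averaged over tasks; with k = 1 this reduces to c / n, the chance that a single attempt succeeds. The sketch below is a generic illustration of that estimator and makes no assumption about how Klavis ran its benchmark.

```python
from __future__ import annotations

from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one task.

    n: attempts sampled, c: attempts that succeeded, k: attempt budget.
    """
    if n - c < k:
        return 1.0  # every size-k subset of attempts contains a success
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over tasks, given one (n, c) pair per task."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# With k = 1 the per-task estimate is c / n (here 3 / 10, up to float rounding).
print(pass_at_k(10, 3, 1))
```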

By integrating Strata into your Langflow agent, you can equip it to handle complex, multi-app workflows with a level of reliability that was previously difficult to achieve.

Step-by-Step Guide: Building Your Agent with Langflow and MCP

Now, let's outline the steps to build your own AI agent using these technologies. In this guide, we'll use the Klavis Strata MCP server, a production-ready hosted MCP server with 50+ pre-integrated applications.

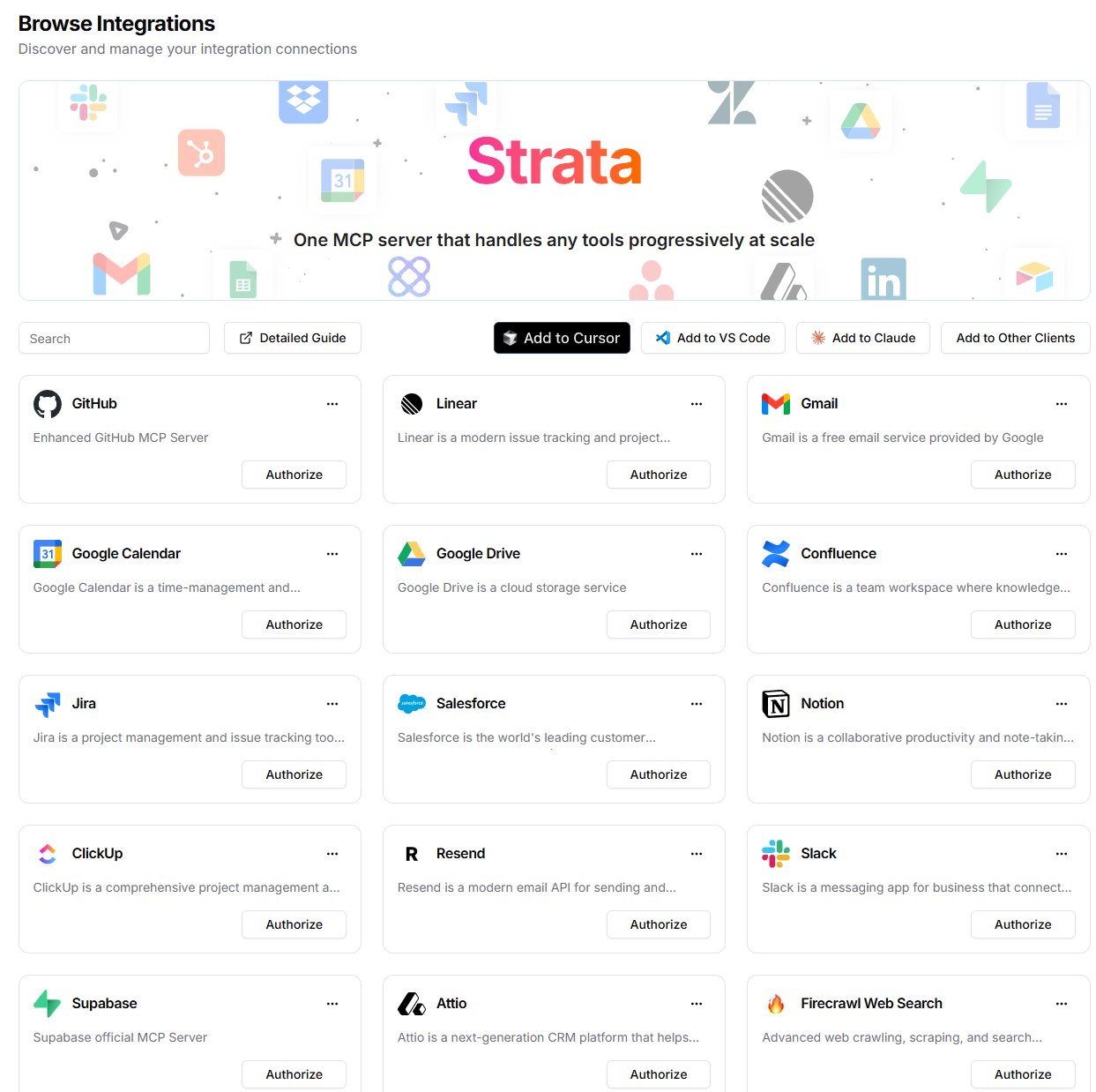

Step 1: Set Up Klavis Strata MCP

Log in to your Klavis AI account. You will see a list of available applications.

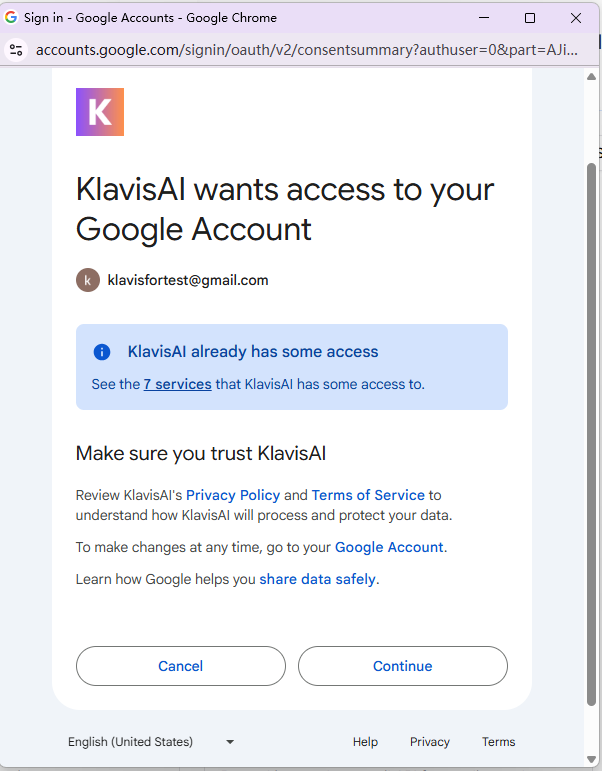

Find the applications you want to integrate and click Authorize to let Klavis Strata access them on your behalf. Here we use Gmail as an example.

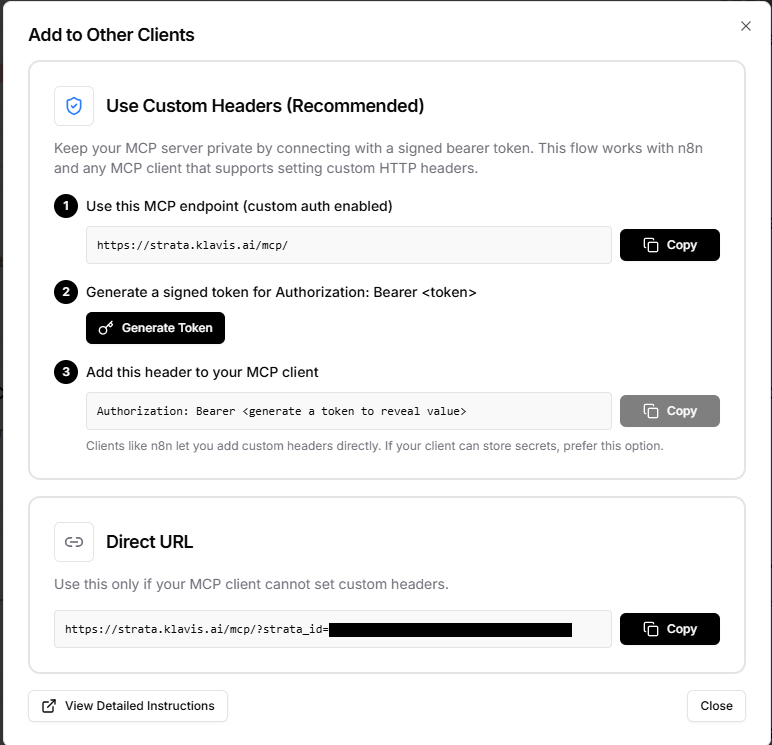

After authorization, click Add to Other Clients. This gives you the URL of the Strata MCP server, which can interact with the applications you just authorized.

Step 2: Create a Flow in Langflow

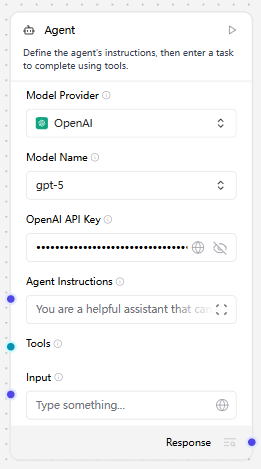

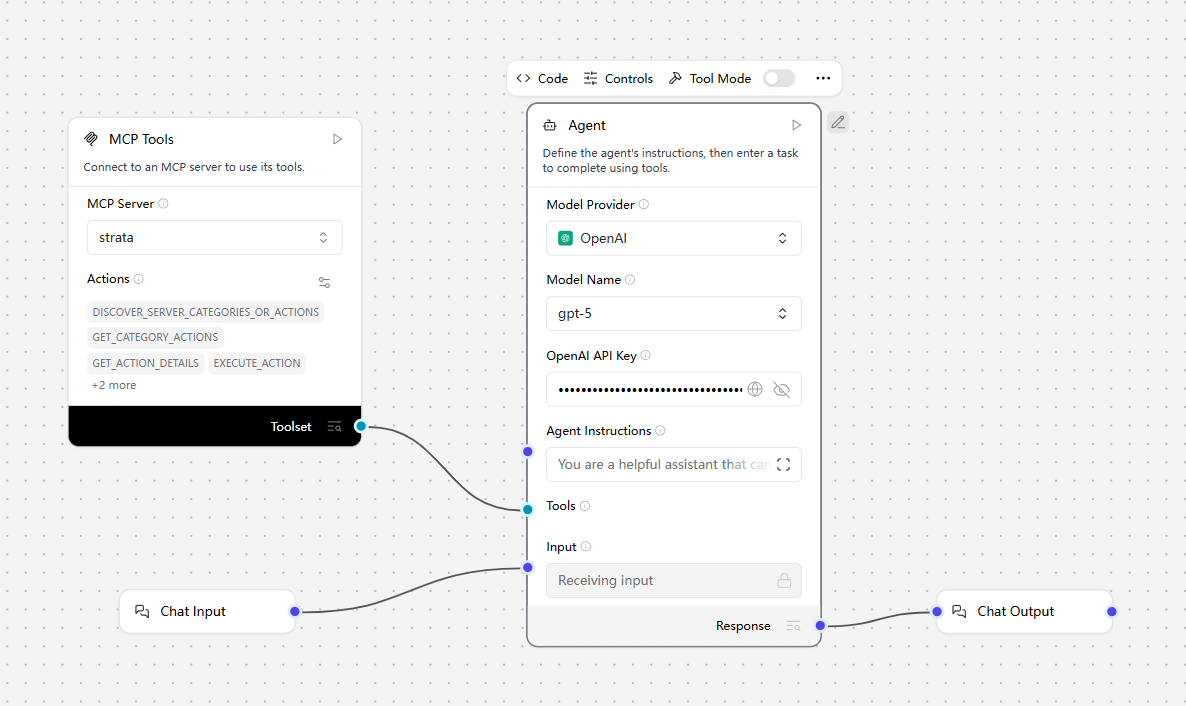

Open Langflow and create a blank flow. Add an Agent component to the flow and choose the model you want the agent to use. Here we use gpt-5 as an example.

Step 3: Configure the MCP Server in Langflow

Next, add the Strata MCP server to Langflow. In the MCP panel, click Add MCP Server and paste the following JSON configuration, replacing <MCP_SERVER_URL> with the MCP server URL you got in Step 1.

```json
{
  "mcpServers": {
    "strata": {
      "command": "uvx",
      "args": [
        "mcp-proxy",
        "--transport",
        "streamablehttp",
        "<MCP_SERVER_URL>"
      ]
    }
  }
}
```
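If you manage several Strata URLs, or simply want to avoid hand-editing JSON, a small script can render the configuration above with your URL filled in. This is an optional convenience sketch: the function name `strata_mcp_config` is hypothetical, the URL check is our own sanity test rather than a Klavis requirement, and the example URL is a placeholder.

```python
import json
from urllib.parse import urlparse


def strata_mcp_config(server_url: str, name: str = "strata") -> str:
    """Render the Langflow MCP server JSON for a Strata URL.

    Mirrors the config above: Langflow launches `uvx mcp-proxy`, which
    bridges to the remote server over the Streamable HTTP transport.
    """
    parsed = urlparse(server_url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not an absolute HTTP(S) URL: {server_url!r}")
    config = {
        "mcpServers": {
            name: {
                "command": "uvx",
                "args": ["mcp-proxy", "--transport", "streamablehttp", server_url],
            }
        }
    }
    return json.dumps(config, indent=2)


# Placeholder URL; use the one Klavis gave you in Step 1.
print(strata_mcp_config("https://example.com/mcp/your-server-id"))
```

Rejecting the literal `<MCP_SERVER_URL>` placeholder up front catches the most common copy-paste mistake before Langflow tries to start the proxy.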

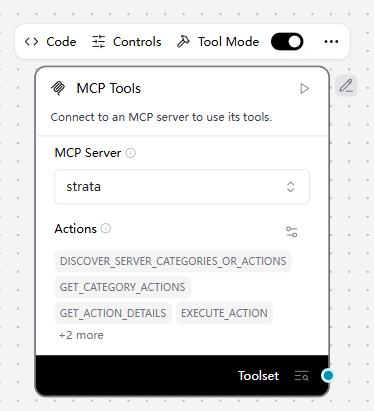

Then add the strata MCP component you just configured to the flow and toggle Tool Mode on it, so the Agent can use its tools.

Step 4: Put Everything Together

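Add Chat Input and Chat Output components to the flow. Then connect the components: Chat Input to the Agent's input, the strata MCP component to the Agent's Tools input, and the Agent's response to Chat Output.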
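Conceptually, the finished flow is a small directed graph: the user's message moves from Chat Input into the Agent, the Strata MCP component is attached to the Agent as a tool, and the Agent's response goes to Chat Output. The sketch below models that wiring with Python's standard `graphlib` module (Python 3.9+) to show one valid build order. It is a mental model only, not Langflow's internal representation or API; the node names simply mirror the component names.

```python
from graphlib import TopologicalSorter

# Each key lists the components it depends on (edges point into the key).
flow = {
    "Agent": {"Chat Input", "Strata MCP (Tool Mode)"},
    "Chat Output": {"Agent"},
}

# Inputs and tools come first, then the Agent, then the output.
order = list(TopologicalSorter(flow).static_order())
print(order)
```

The relative order of the two Agent inputs is not fixed, since neither depends on the other; only the Agent and Chat Output positions are guaranteed.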

Step 5: Verify

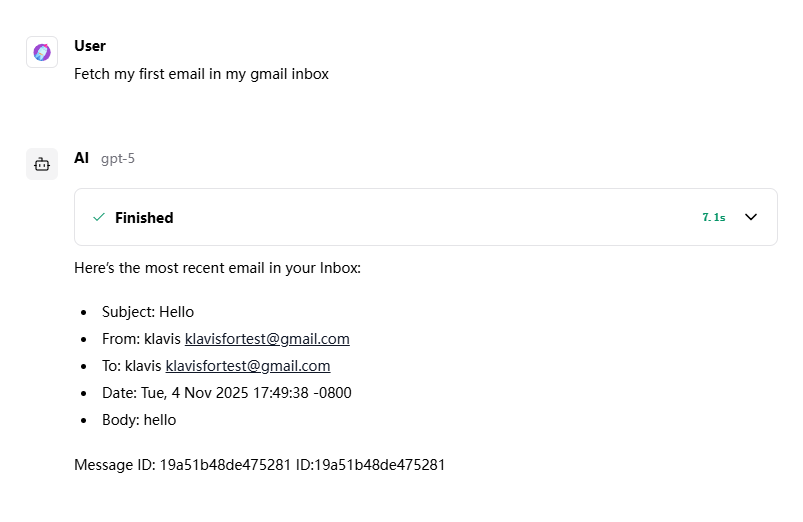

Test the agent in the Langflow Playground by sending it a query:

Fetch my first email in my Gmail inbox.

The agent should call the Strata tools to retrieve the message and reply with its contents.

Next Steps

You can extend your agent by adding more functionality, integrating additional applications through Strata MCP servers, or customizing the flow to suit your specific use cases. You can also build your AI agent programmatically through the available APIs instead of the visual editor.

Check Langflow's official documentation and Klavis AI's MCP documentation for more advanced features and configurations.

Conclusion

The combination of Langflow's visual development environment and the standardized power of the Model Context Protocol marks a significant leap forward for AI application developers. By abstracting away complexity and providing intelligent solutions for tool management, technologies like Langflow and Klavis Strata are making it easier than ever to build truly autonomous and capable AI agents.

Frequently Asked Questions (FAQs)

Q1: Do I need to be an expert coder to use Langflow? No, Langflow is a low-code tool designed to be accessible to developers of all skill levels. While you can extend its functionality with Python, the core workflow is managed through a visual drag-and-drop interface.

Q2: Is MCP compatible with popular agent frameworks like LangChain or LlamaIndex? Yes, absolutely. MCP is an interoperable standard designed to work across the ecosystem. You can create custom tool wrappers within frameworks like LangChain or LlamaIndex to communicate with any MCP server. This allows you to leverage the rich agentic capabilities of these frameworks while benefiting from the standardized tool integration and discovery that MCP provides.

Q3: Can I use my own custom tools with an MCP server? Absolutely. You can create your own MCP server to expose any private APIs or custom functions to your agent. Solutions like Klavis AI also offer open-source options to help you get started with self-hosting.