The Short Version: Give Your AI Access to Your Live Data

Large Language Models (LLMs) are powerful reasoning engines, but they are isolated from the context of your actual business operations. To build truly intelligent, autonomous features, you must connect your AI to your application's source of truth. For a rapidly growing number of developers, that source of truth is Supabase, the open source Postgres-based backend.

This guide explores why bridging the gap between natural language and your database infrastructure is a paradigm shift for application development, and walks through a secure, scalable way to implement it today.

Why Connect an LLM? Moving Beyond the Dashboard

Integrating Supabase with an LLM isn't just about creating a novel chatbot; it fundamentally changes how your team and your end-users interact with data and infrastructure. It shifts operations from manual, context-heavy tasks performed in a dashboard or CLI to intent-based automation.

By giving an AI agent secure access to Supabase tools, you democratize data access and accelerate workflows.

Here is a comparison of the traditional workflow versus an AI-augmented approach:

| Aspect | Manual Supabase Workflow | AI-Assisted Workflow |
|---|---|---|
| Data Retrieval | Requires knowing SQL, understanding the specific schema, and manually executing queries. | Users ask questions in plain English. The AI handles schema discovery and SQL generation. |
| Accessibility | Limited to developers, DBAs, or users with access to BI tools. | Accessible to non-technical stakeholders (Support, Marketing, PMs) via chat interfaces. |
| Infrastructure Ops | Navigating the Supabase dashboard or using the CLI to branch, migrate, or manage settings. | Executed via natural language commands (e.g., "Create a staging branch for PR #42"). |
| Complex Workflows | Human must chain tasks together (e.g., query data -> format it -> call another API). | AI agents can autonomously chain Supabase tools with other API calls to complete complex goals. |
| Vector Search (RAG) | Requires manually generating embeddings, writing complex pgvector queries, and managing context. | The AI handles the entire Retrieval-Augmented Generation (RAG) pipeline dynamically based on user intent. |

Quickstart Guide

Here we demonstrate how to create a Supabase MCP server and connect it to an MCP client via the Klavis UI.

- Log in to your Klavis account and navigate to the home section.

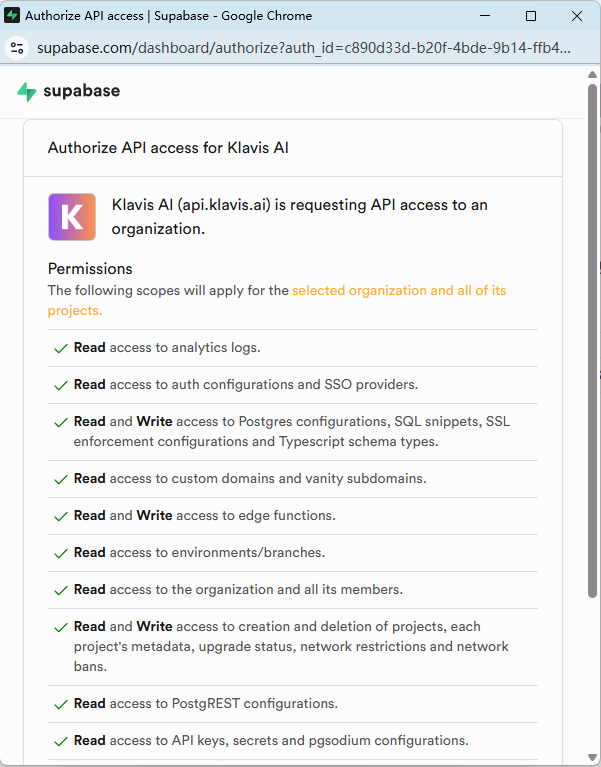

- Use the search box to find "Supabase" and click the Authorize button.

- Follow the OAuth flow to authorize Klavis to access your Supabase project. After successful authorization, the server should show the "Authorized" status.

- Add the server to your preferred MCP client by clicking the Add to XXX button, where XXX is the name of your client.

Your Supabase project is now connected to LLMs via Klavis MCP, and you can use the MCP client to interact with your Supabase database.

Tip: You can also create a Strata MCP server programmatically via the API; see the Klavis documentation for details.
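Under the hood, an MCP client talks to the server with JSON-RPC 2.0 messages, and invoking a tool is a `tools/call` request that carries the tool's `name` and its `arguments`. Your MCP client (VS Code, in the examples below) builds these messages for you; the minimal Python sketch here only shows their shape. The tool names supabase_list_tables and supabase_execute_sql are the Supabase tools this article refers to, but the argument keys (`project_id`, `query`) are hypothetical. Check the input schema the server advertises in its `tools/list` response for the real ones.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids, as a client would assign them.
_ids = count(1)


def tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical argument names: the real schema comes from the server's tools/list.
list_req = tool_call("supabase_list_tables", {"project_id": "<your-project-id>"})
sql_req = tool_call(
    "supabase_execute_sql",
    {"project_id": "<your-project-id>", "query": "select count(*) from employee;"},
)
print(list_req)
print(sql_req)
```

In practice the LLM chooses which tool to call and with what arguments; the client serializes the request and returns the server's result to the model.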

Real Examples Showcase

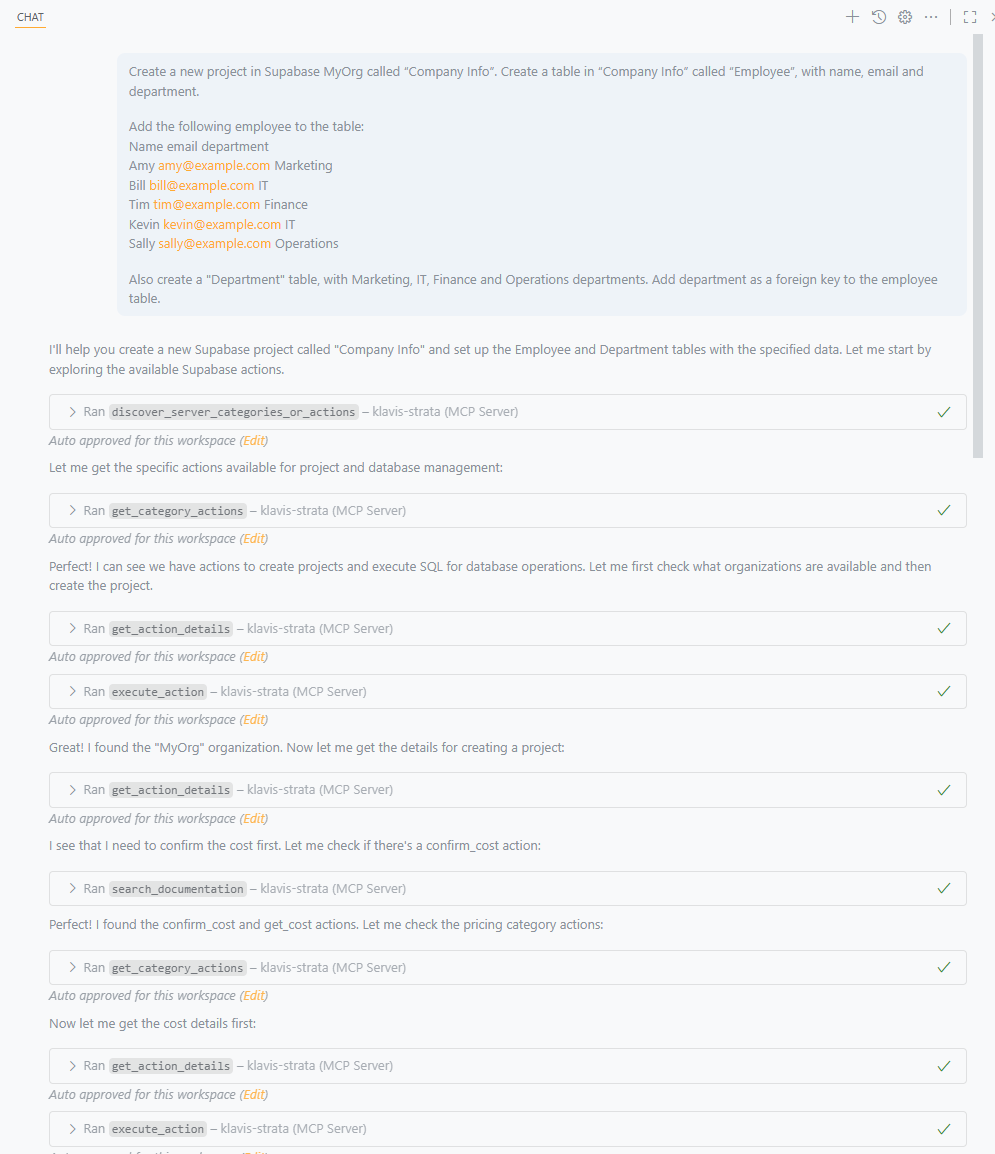

Now that we have connected Supabase to LLMs via Klavis MCP, let's explore some practical examples of what this integration can do. Below are a few real examples, with prompts, of using the Strata Supabase MCP with LLMs. We use VS Code connected to the Claude Sonnet 4 model.

Example 1: Create table and insert data

We can create a new table and insert data into it using natural language prompts.

Prompt:

Create a new project in Supabase MyOrg called “Company Info”. Create a table in “Company Info” called “Employee”, with name, email and department.

Add the following employee to the table:

| Name | Email | Department |
|---|---|---|
| Amy | amy@example.com | Marketing |
| Bill | bill@example.com | IT |
| Tim | tim@example.com | Finance |
| Kevin | kevin@example.com | IT |
| Sally | sally@example.com | Operations |

Also create a "Department" table, with Marketing, IT, Finance and Operations departments. Add department as a foreign key to the employee table.

LLM response:

We can see the model executing the tasks step by step, guided by Strata's progressive discovery method:

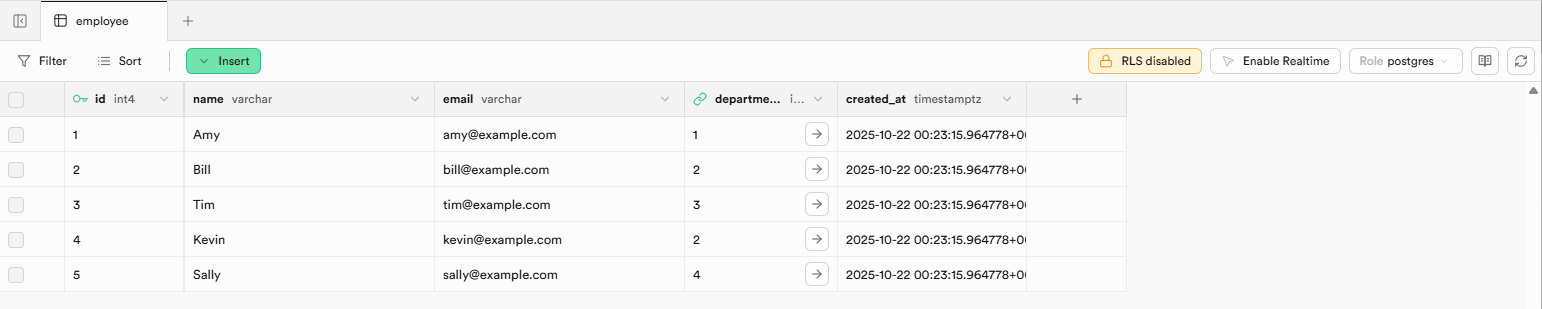

Result:

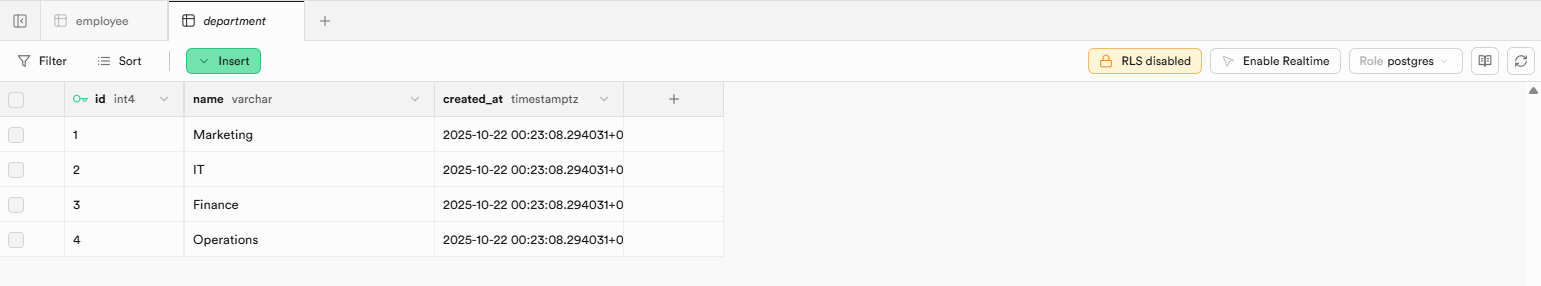

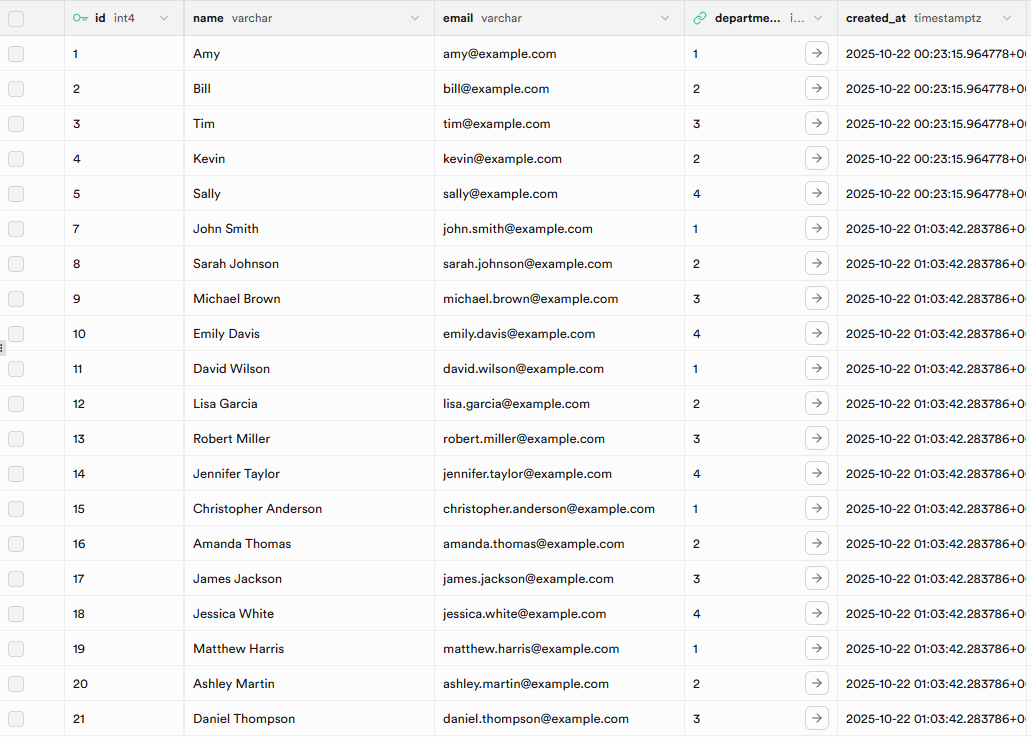

As the screenshots show, both tables were created in Supabase with the data inserted.
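The article does not show the exact SQL the model ran. As a rough approximation, the sketch below builds an equivalent schema with Python's built-in sqlite3 module, which serves as a local stand-in for Supabase's Postgres. The table and column names (department, employee, department_id) are assumptions. One difference to keep in mind is that SQLite only enforces foreign keys after `PRAGMA foreign_keys = ON`, whereas Postgres always enforces them.

```python
import sqlite3

# In-memory SQLite database as a local stand-in for the Supabase (Postgres) project.
conn = sqlite3.connect(":memory:")
# SQLite only enforces foreign keys when this pragma is on; Postgres always does.
conn.execute("PRAGMA foreign_keys = ON")

conn.executescript("""
CREATE TABLE department (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE employee (
    id            INTEGER PRIMARY KEY,
    name          TEXT NOT NULL,
    email         TEXT NOT NULL UNIQUE,
    -- Foreign key from the prompt: each employee belongs to one department.
    department_id INTEGER NOT NULL REFERENCES department(id)
);
""")

conn.executemany(
    "INSERT INTO department (name) VALUES (?)",
    [("Marketing",), ("IT",), ("Finance",), ("Operations",)],
)

# Employees from the prompt, with department names instead of IDs.
employees = [
    ("Amy", "amy@example.com", "Marketing"),
    ("Bill", "bill@example.com", "IT"),
    ("Tim", "tim@example.com", "Finance"),
    ("Kevin", "kevin@example.com", "IT"),
    ("Sally", "sally@example.com", "Operations"),
]
# INSERT ... SELECT resolves each department name to its ID.
# An unknown department name matches no row, so nothing is inserted for it.
conn.executemany(
    "INSERT INTO employee (name, email, department_id) "
    "SELECT ?, ?, id FROM department WHERE name = ?",
    employees,
)
conn.commit()

for row in conn.execute(
    "SELECT e.name, d.name FROM employee e "
    "JOIN department d ON d.id = e.department_id ORDER BY e.id"
):
    print(row)
```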

Example 2: Query and trigger actions based on data

We want to query the number of employees in each department and store it in a new number_of_people column on the department table. We also need a trigger that keeps this column up to date whenever the employee table changes.

Prompt:

Add a column in department table called "number_of_people". It should be a SQL or trigger counting the actual number of people in each department.

Result:

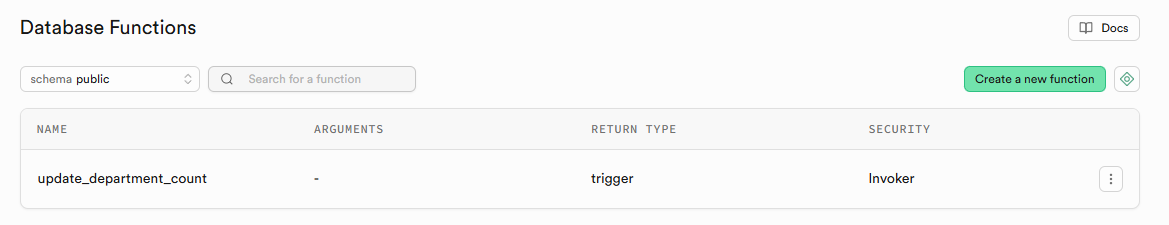

We can see the trigger function created in Supabase:

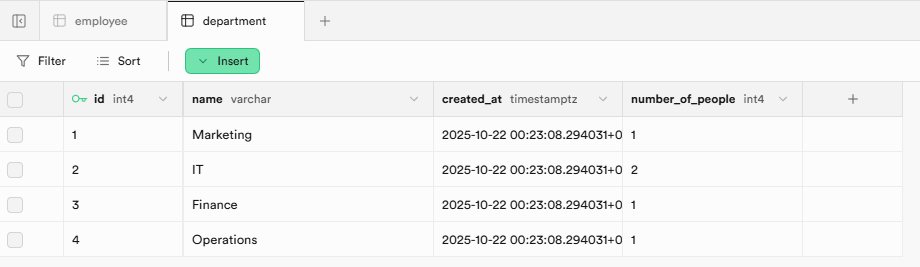

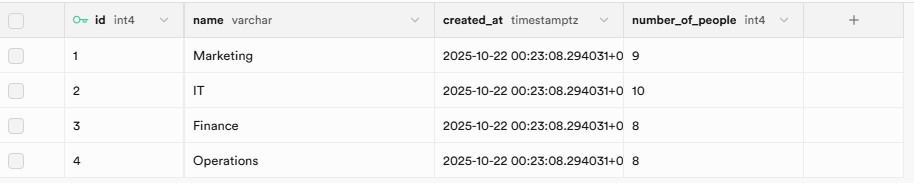

The department table is also updated with the new number_of_people column.
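To show the idea behind the trigger the prompt asks for, here is a minimal sketch using Python's sqlite3 as a local stand-in. It is not the function the model generated in Supabase. In Postgres, the same logic would live in a PL/pgSQL trigger function (`CREATE FUNCTION ... RETURNS trigger`) attached with `CREATE TRIGGER ... FOR EACH ROW EXECUTE FUNCTION ...`. SQLite instead accepts the statements directly in the trigger body. Table and trigger names are assumptions. Each trigger recounts only the departments touched by the changed row, and the final query is the plain GROUP BY count that the column is meant to cache.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE department (
    id               INTEGER PRIMARY KEY,
    name             TEXT NOT NULL UNIQUE,
    number_of_people INTEGER NOT NULL DEFAULT 0
);
CREATE TABLE employee (
    id            INTEGER PRIMARY KEY,
    name          TEXT NOT NULL,
    department_id INTEGER REFERENCES department(id)
);

-- A new employee changes the count of the department they join.
CREATE TRIGGER employee_insert AFTER INSERT ON employee BEGIN
    UPDATE department
    SET number_of_people = (SELECT count(*) FROM employee AS e WHERE e.department_id = department.id)
    WHERE id = NEW.department_id;
END;

-- A removed employee changes the count of the department they left.
CREATE TRIGGER employee_delete AFTER DELETE ON employee BEGIN
    UPDATE department
    SET number_of_people = (SELECT count(*) FROM employee AS e WHERE e.department_id = department.id)
    WHERE id = OLD.department_id;
END;

-- Moving an employee changes both the old and the new department.
CREATE TRIGGER employee_move AFTER UPDATE OF department_id ON employee BEGIN
    UPDATE department
    SET number_of_people = (SELECT count(*) FROM employee AS e WHERE e.department_id = department.id)
    WHERE id IN (OLD.department_id, NEW.department_id);
END;
""")

conn.executemany("INSERT INTO department (name) VALUES (?)", [("IT",), ("Finance",)])
conn.executemany(
    "INSERT INTO employee (name, department_id) VALUES (?, ?)",
    [("Bill", 1), ("Kevin", 1), ("Tim", 2)],
)
conn.execute("UPDATE employee SET department_id = 2 WHERE name = 'Kevin'")

# Cached counts maintained by the triggers.
print(conn.execute("SELECT name, number_of_people FROM department ORDER BY id").fetchall())
# The same numbers computed on demand with a GROUP BY query.
counts_by_query = conn.execute(
    "SELECT d.name, count(e.id) FROM department d "
    "LEFT JOIN employee e ON e.department_id = d.id GROUP BY d.id ORDER BY d.id"
).fetchall()
print(counts_by_query)
```

The prompt allows either approach ("a SQL or trigger"). A view over the GROUP BY query is always exact but recomputes on every read. A trigger-maintained column is cheap to read but adds work to every write on the employee table.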

Example 3: Integrate with other data sources

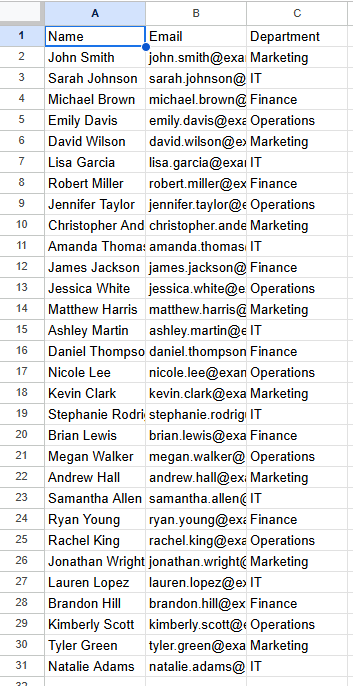

Because Strata MCP can connect multiple applications, Supabase can also work alongside other data sources. Here, we connect Supabase with Google Sheets via Klavis MCP and copy the employee data from a Google Sheet into the Supabase employee table.

Prompt:

Dump "Test Employee" google sheet data to the supabase "Company Info" project "Employee" table.

Result:

The data from Google Sheets is inserted into the Supabase employee table.

The department table is also updated accordingly by the trigger we created earlier.
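A bulk copy like this has to reconcile the sheet's free-text department names with the department foreign key on the employee table. The Python sketch below shows one way an agent or script might resolve names to IDs before inserting and set aside rows that would violate the constraint. The sheet contents, column headers, and department IDs are hypothetical, because the article does not show the real "Test Employee" sheet.

```python
import csv
import io

# Hypothetical CSV export of the sheet; the real sheet contents are not shown.
SHEET_CSV = """name,email,department
Jane,jane@example.com,IT
Omar,omar@example.com,Finance
Lee,lee@example.com,Legal
"""


def plan_insert(rows, department_ids):
    """Split sheet rows into insertable (name, email, department_id) tuples
    and rows whose department has no matching ID."""
    ready, rejected = [], []
    for row in rows:
        dept_id = department_ids.get(row["department"].strip())
        if dept_id is None:
            # Inserting this row would violate the department foreign key.
            rejected.append(row)
        else:
            ready.append((row["name"].strip(), row["email"].strip().lower(), dept_id))
    return ready, rejected


# Hypothetical name-to-ID mapping, e.g. read from the department table first.
department_ids = {"Marketing": 1, "IT": 2, "Finance": 3, "Operations": 4}
rows = list(csv.DictReader(io.StringIO(SHEET_CSV)))
ready, rejected = plan_insert(rows, department_ids)
print(ready)
print(rejected)
```

Reporting rejected rows back to the user is usually better than dropping them silently. It also gives the agent a chance to ask whether a missing department, such as "Legal" here, should be created.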

Conclusion

In this article, we have demonstrated how to integrate Supabase with LLMs using Klavis Strata MCP. We have also showcased several practical examples of what this integration can achieve, including creating tables, inserting data, adding triggers, querying data, and integrating with other data sources. Combining the reasoning of LLMs with the flexibility of Supabase opens up many possibilities for building intelligent applications that make effective use of your data.

FAQs

1. Is this approach secure? Yes. By using a managed MCP server with OAuth, you avoid handling long-lived database credentials directly within the LLM's prompt or your application code. The AI operates on a principle of least privilege, only accessing the specific tools and data permitted by the authenticated user's token.

2. Can I use this with Supabase's vector search (pgvector)? Absolutely. The supabase_execute_sql tool is your gateway to pgvector, the open source vector similarity search extension for Postgres (available on GitHub). You can execute any valid PostgreSQL query your access permits, including vector similarity searches with operators such as <=> (cosine distance). This makes it a strong foundation for building RAG applications on top of your existing Supabase data.

3. Do I need to manually define the database schema for the LLM? No. The agent can call tools like supabase_list_tables and supabase_list_columns to explore and understand your database schema dynamically before constructing a query, which helps reduce errors and hallucinations.
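In pgvector, the <=> operator returns the cosine distance (1 minus cosine similarity). A query like `ORDER BY embedding <=> query LIMIT k` therefore returns the k most similar rows. The pure-Python sketch below reproduces that ranking on toy three-dimensional vectors so the operator's behavior is concrete. The `documents` table named in the comment and the vectors themselves are illustrative. Real embeddings come from an embedding model and have hundreds or thousands of dimensions.

```python
import math


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity, the quantity pgvector's <=> operator returns.

    Assumes both vectors are non-zero and have the same length.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norms


def nearest(query, rows, k):
    """Mimics: SELECT content FROM documents ORDER BY embedding <=> query LIMIT k."""
    return sorted(rows, key=lambda row: cosine_distance(query, row[1]))[:k]


# Toy 3-dimensional "embeddings"; real ones come from an embedding model.
docs = [
    ("refund policy", [1.0, 0.0, 0.0]),
    ("shipping times", [0.0, 1.0, 0.0]),
    ("returns and refunds", [0.9, 0.1, 0.0]),
]
print([content for content, _ in nearest([1.0, 0.0, 0.0], docs, k=2)])
# ['refund policy', 'returns and refunds']
```

In a RAG pipeline, the rows returned by this kind of query are what gets passed to the LLM as context for its answer.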